Learning Mid-level Filters for Person Re-identification

Rui Zhao Wanli Ouyang Xiaogang Wang

The Chinese University of Hong Kong

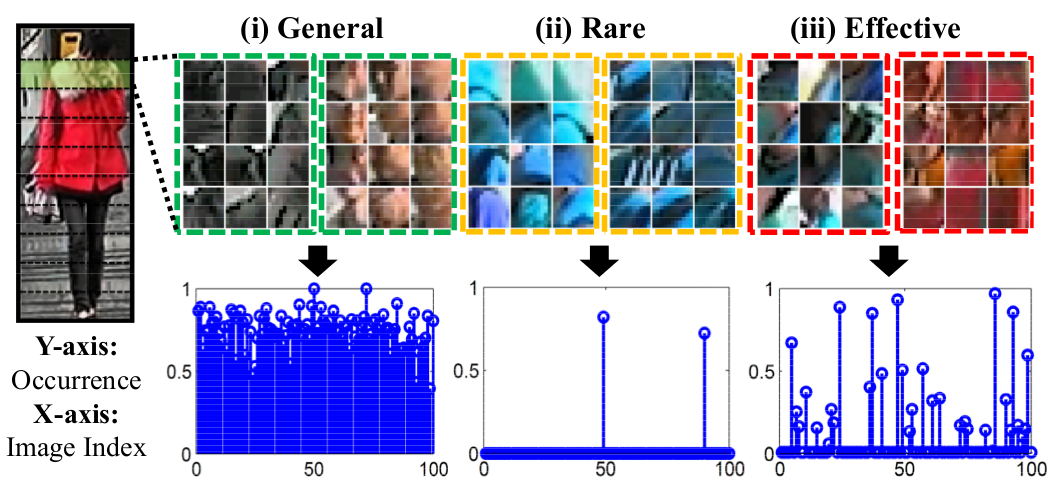

Figure 1. Three types of patches with different discriminative and generalization powers. Each dashed box indicates a patch cluster. To make filters region-specific, we cluster patches within the same horizontal stripe across different pedestrian images. See details in the text of Section 1 of our paper.

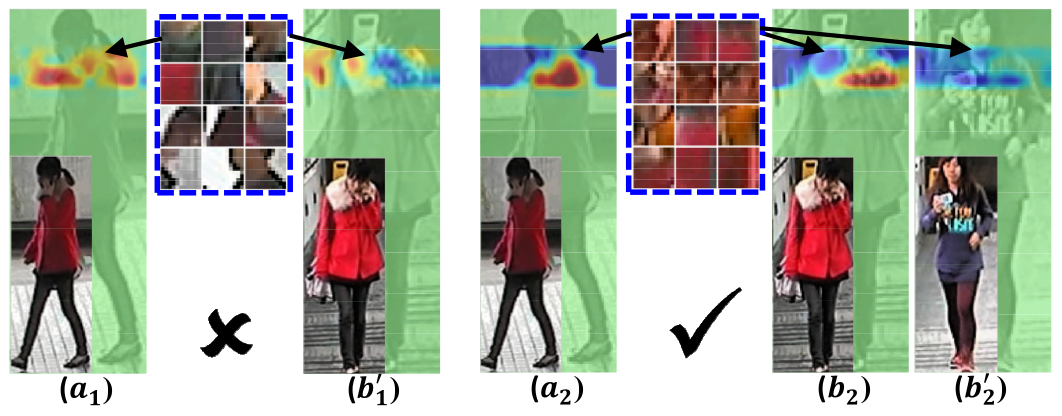

Figure 2. The filter in (a1)(b1) is learned from a cluster with incoherent appearance and generates scattered responses in the two images. The filter in (a2)(b2) is learned from a cluster with coherent appearance and generates compact responses. It is also view-invariant: it matches (a2) and (b2), which show the same person in different views, while distinguishing (b2) from (b'2), which show different persons in the same view.

Abstract

In this paper, we propose a novel approach of learning mid-level filters from automatically discovered patch clusters for person re-identification. It is well motivated by our study on what are good filters for person re-identification. Our mid-level filters are discriminatively learned for identifying specific visual patterns and distinguishing persons, and have good cross-view invariance. First, local patches are qualitatively measured and classified with their discriminative power. Discriminative and representative patches are collected for filter learning. Second, patch clusters with coherent appearance are obtained by pruning hierarchical clustering trees, and a simple but effective cross-view training strategy is proposed to learn filters that are view-invariant and discriminative. Third, filter responses are integrated with patch matching scores in RankSVM training. The effectiveness of our approach is validated on the VIPeR dataset and the CUHK Campus dataset. The learned mid-level features are complementary to existing handcrafted low-level features, and improve the best Rank-1 matching rate on the VIPeR dataset by 14%.

Papers

- Learning Mid-level Filters for Person Re-identification, R. Zhao, W. Ouyang and X. Wang. IEEE CVPR, 2014. (Acceptance rate: 29.8%)

[PDF] [Bibtex] [Project Page] [Poster]

Spotlight

Highlights

- A novel measurement called the partial AUC (pAUC) score is proposed to quantify the discriminative power of image patches in person re-identification.

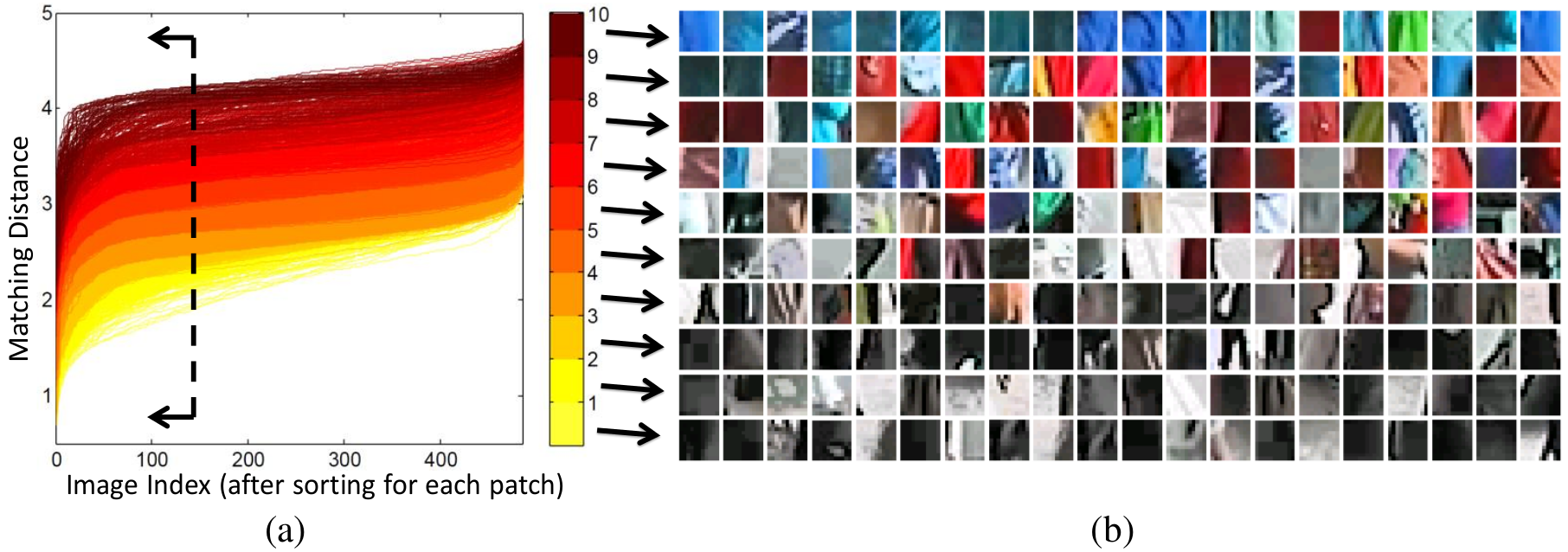

Figure 3. (a): Each curve represents the sorted distances between a patch and the patches in its nearest-neighbor set. The pAUC score is computed by accumulating the patch matching distances to the Np closest nearest neighbors, as indicated by the black arrows. Different colors indicate different pAUC levels. (b): Example patches randomly sampled from each pAUC level. Patches in low pAUC levels are monochromatic and frequently seen, while those in high pAUC levels are varicolored and appear less frequently. The examples illustrate that the pAUC score is effective in quantifying discriminative power.
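The accumulation described in Figure 3(a) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `pauc_score`, the argument `n_p` (standing in for Np), and the toy distance values are hypothetical, and the actual patch matching distance used in the paper is not reproduced here.

```python
def pauc_score(distances, n_p):
    """Accumulate the n_p smallest distances between one patch and
    the patches in its nearest-neighbor set (hypothetical helper)."""
    if n_p <= 0 or n_p > len(distances):
        raise ValueError("n_p must be between 1 and len(distances)")
    ranked = sorted(distances)   # the sorted curve drawn in Figure 3(a)
    return sum(ranked[:n_p])     # area under the first n_p points


# Toy distances (made up for illustration only).
common_patch = [0.10, 0.20, 0.10, 0.30, 0.20]   # many close neighbors
rare_patch = [0.70, 0.90, 0.80, 1.10, 1.00]     # few close neighbors

low_score = pauc_score(common_patch, n_p=3)
high_score = pauc_score(rare_patch, n_p=3)
```

In this toy case the frequently seen patch receives the lower score and the rare patch the higher one, which matches the ordering of pAUC levels described in Figure 3(b).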

- Coherent patch clusters are obtained by pruning hierarchical clustering trees.

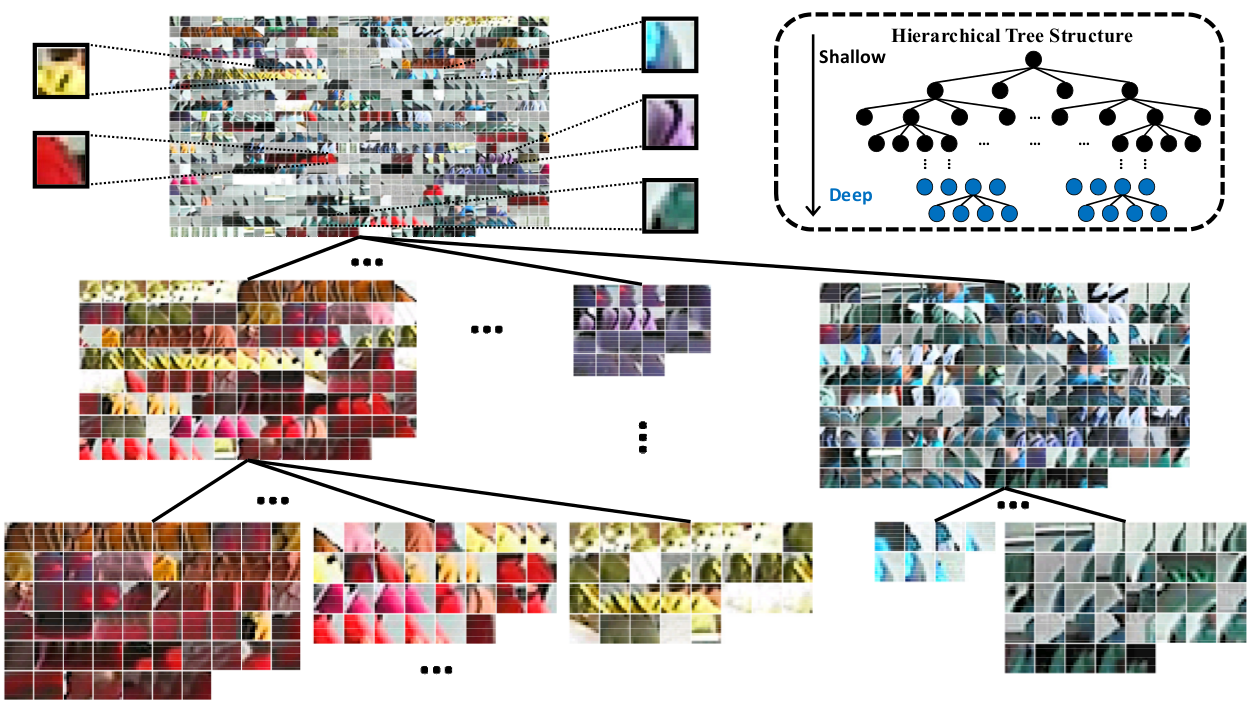

Figure 4. Illustration of the hierarchical clustering tree structure, with examples of cluster nodes. As shown in the dashed box, patches in a parent node are divided into Ot = 4 child nodes, and shallow nodes (in black) are decomposed into deep nodes (in blue) during hierarchical clustering. The shallow nodes represent coarse clusters, while the deep nodes represent finer clusters. Shallow nodes contain patches with different color and texture patterns, while the patch patterns in deep nodes are more coherent.
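The tree structure in Figure 4 can be approximated with a recursive split, where each parent node is divided into at most Ot = 4 children. The sketch below is a rough illustration, not the authors' implementation: it assumes plain k-means on toy 2-D feature vectors as the splitting step, and the stopping parameters `max_depth` and `min_size` as well as the choice of keeping leaf nodes as the "deep" clusters are hypothetical. The actual pruning rule is described in the paper.

```python
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two feature tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean(points):
    """Component-wise mean of a non-empty list of feature tuples."""
    n = len(points)
    return tuple(sum(col) / n for col in zip(*points))


def kmeans(points, k, iters=20, seed=0):
    """Plain k-means (an assumed splitting step); returns non-empty groups."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)
    groups = []
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: sq_dist(p, centers[i]))
            groups[nearest].append(p)
        centers = [mean(g) if g else centers[i] for i, g in enumerate(groups)]
    return [g for g in groups if g]


def build_tree(points, branching=4, max_depth=2, min_size=4, depth=0):
    """Recursively divide a node into at most `branching` child nodes."""
    node = {"depth": depth, "patches": points, "children": []}
    if depth < max_depth and len(points) >= max(min_size, branching):
        groups = kmeans(points, branching)
        if len(groups) > 1:  # stop if the split did not separate anything
            node["children"] = [
                build_tree(g, branching, max_depth, min_size, depth + 1)
                for g in groups
            ]
    return node


def leaves(node):
    """Collect the deepest nodes, i.e. the finest clusters in the tree."""
    if not node["children"]:
        return [node]
    found = []
    for child in node["children"]:
        found.extend(leaves(child))
    return found


# Toy 2-D "patch features": four blobs of ten points each (made-up data).
rng = random.Random(1)
blob_centers = [(0, 0), (10, 0), (0, 10), (10, 10)]
points = [(cx + rng.random(), cy + rng.random())
          for cx, cy in blob_centers for _ in range(10)]
tree = build_tree(points)
fine_clusters = leaves(tree)
```

Shallow nodes in this sketch hold mixed points, while deeper nodes hold tighter groups, mirroring the coarse-to-fine behavior described in the caption.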

-

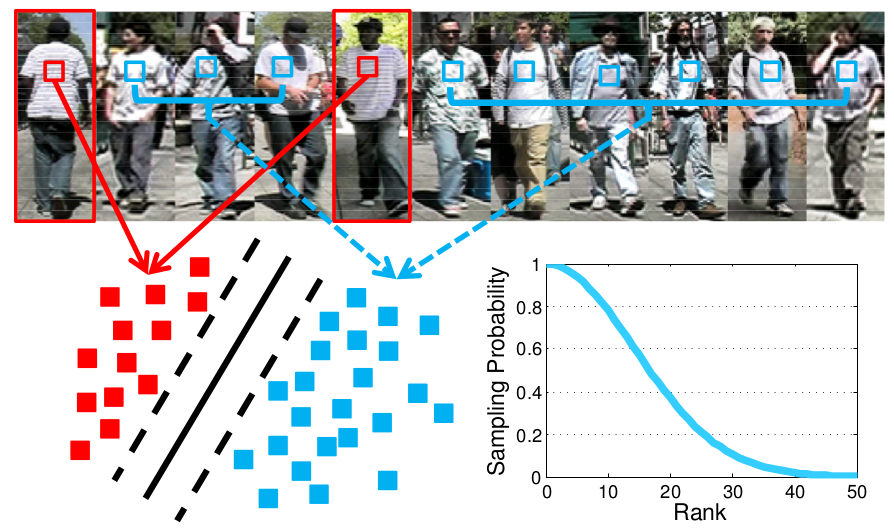

A simple but effective cross-view training strategy is propose to learn filters that are view invariant and discriminative in distinguishing identities

Figure 5. Scheme for learning view-invariant and discriminative filters. Patches in red boxes are matched patches from images of the same person, while those in blue boxes are matched patches from the most confusing images. The bottom right shows the probability distribution used for sampling auxiliary negative samples.

Downloads

- dense_feat: MATLAB package for dense color histogram and SIFT feature extraction.

- CMC: CMC curves on both the VIPeR dataset and the CUHK01 dataset.

- Code: MATLAB code with demo for the CUHK01 dataset.
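For readers unfamiliar with CMC curves, the following Python sketch shows how a cumulative matching characteristic is commonly computed from a probe-to-gallery distance matrix; its first value is the Rank-1 matching rate. It is not taken from the downloadable package. The function name `cmc_curve`, the assumption that the true match of probe `i` is gallery entry `i`, and the toy distances are all hypothetical.

```python
def cmc_curve(dist_matrix, max_rank):
    """Return [rate at rank 1, ..., rate at rank max_rank].

    dist_matrix[i][j] is the distance from probe i to gallery image j.
    Assumption: the true match of probe i is gallery image i.
    """
    n_probes = len(dist_matrix)
    hits = [0] * max_rank
    for i, row in enumerate(dist_matrix):
        true_dist = row[i]
        # 0-based rank of the true match: number of strictly closer gallery images.
        rank = sum(1 for d in row if d < true_dist)
        # A match at this rank counts for it and every larger rank.
        for k in range(rank, max_rank):
            hits[k] += 1
    return [h / n_probes for h in hits]


# Toy 3x3 distance matrix (made-up values).
toy_dist = [
    [0.1, 0.5, 0.9],   # probe 0: true match ranked first
    [0.4, 0.3, 0.8],   # probe 1: true match ranked first
    [0.2, 0.6, 0.7],   # probe 2: true match ranked third
]
curve = cmc_curve(toy_dist, max_rank=3)
rank1_rate = curve[0]
```

Here two of the three probes are matched correctly at rank 1, and all three are matched by rank 3.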

Reference

- Person Re-Identification: System Design and Evaluation Overview. X. Wang and R. Zhao. Person Re-identification, Springer 2014.

- Learning Mid-level Filters for Person Re-identification. R. Zhao, W. Ouyang, and X. Wang. CVPR 2014.

- DeepReid: Deep Filter Pairing Neural Network for Person Re-identification. W. Li, R. Zhao, T. Xiao, and X. Wang. CVPR 2014.

- Person Re-identification by Salience Matching. R. Zhao, W. Ouyang, and X. Wang. ICCV 2013.

- Unsupervised Salience Learning for Person Re-identification. R. Zhao, W. Ouyang, and X. Wang. CVPR 2013.

- Human Re-identification with Transferred Metric Learning. W. Li, R. Zhao, and X. Wang. ACCV 2012.